Here is a pointer to a highly interesting article about the systemic problem of proving that online advertising and targeting actually work. Its title: “The new dot com bubble is here: it’s called online advertising”

Effects: which ones exactly? KPIs from Google and Facebook don't tell the whole story

You know how it goes: you switch on targeting and all the efficiency KPIs shoot up. More sales for the same media budget, or more registrations, or more site visits. But where do these increases come from? What causes them? What do the KPIs from the black boxes of Google and Facebook actually tell us? Researchers at the Kellogg School of Management asked themselves this question and analyzed the data sets of US advertisers with rigorous statistical methods. The result is a study titled: A Comparison of Approaches to Advertising Measurement: Evidence from Big Field Experiments at Facebook.

On the trail of advertising effectiveness with big data analyses

It will be hard to argue against the study's findings, because the data base is large, very large, which makes the results difficult to dismiss. The researchers evaluated experiments from 15 US advertisers, covering a total of 500 million users and 1.6 billion ad impressions. The data show how online advertising affects campaign goals such as direct sales and website visits. To measure this, A/B tests were run across different industries, with one group seeing the ads and one group not. The core finding: there are 2 kinds of “advertising effect”. In both cases users end up doing exactly what the ad intends, for example buying.

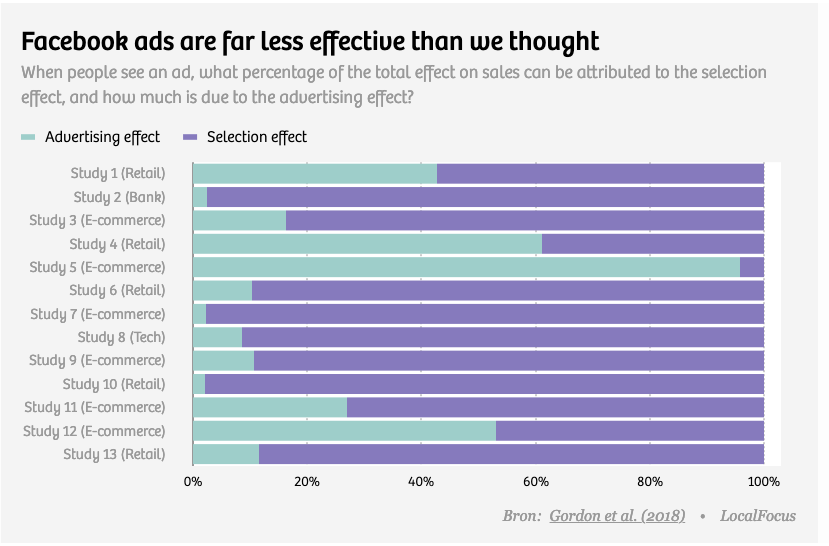

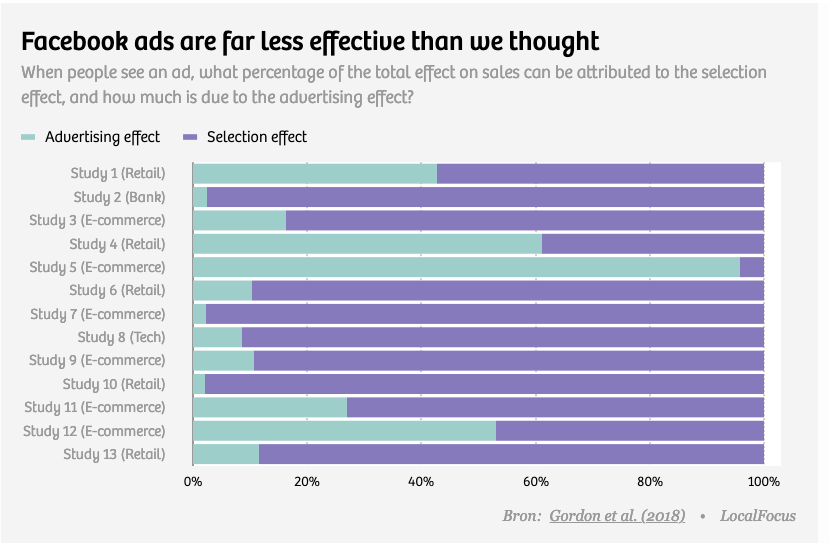

Advertising effect or selection effect? KPIs report on 2 kinds of “effect”

First, there is the desired advertising effect. Advertising generates sales that would not have happened without it. The causality is clear: I sell because I advertised.

Second, there is the selection effect. The advertising reaches users who would have bought anyway. They generate revenue that click KPIs credit to the ad, even though the ad did nothing to cause it. Take Google as an example: you run AdWords on your own brand name, people click the paid link, but they would just as well have clicked the organic link if it had been in first position. The selection effect means: I am paying for contacts or customers I already have.
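The difference between the two effects can be made concrete with a little arithmetic. The following is a minimal sketch with made-up numbers (they are not taken from the study), and all variable names are hypothetical. It compares a naive KPI-style view (exposed vs. unexposed users) with the lift measured against a randomized control group. It assumes that ads have no effect on users who never saw them.

```python
# Toy illustration with made-up numbers (NOT from the study).

def rate(conversions: int, users: int) -> float:
    """Share of users who converted."""
    return conversions / users

# Randomized experiment: users are split by chance.
# Control group: ads are withheld entirely.
control_users, control_conversions = 100_000, 1_000

# Test group: eligible for ads, but targeting decides who actually sees one.
test_users = 100_000
exposed_users, exposed_conversions = 40_000, 900
unexposed_users, unexposed_conversions = 60_000, 300
test_conversions = exposed_conversions + unexposed_conversions

# 1) Naive KPI-style view: compare exposed with unexposed users.
naive_lift = (rate(exposed_conversions, exposed_users)
              - rate(unexposed_conversions, unexposed_users))

# 2) Experimental view: compare the whole test group with the control group.
experimental_lift = (rate(test_conversions, test_users)
                     - rate(control_conversions, control_users))

# 3) Scale the experimental lift to exposed users only.
#    Assumption: ads do not change the behavior of users who never saw them.
exposure_share = exposed_users / test_users
lift_per_exposed_user = experimental_lift / exposure_share

# Whatever the naive view shows beyond the experimental lift is selection.
selection_effect = naive_lift - lift_per_exposed_user

print(f"naive lift:            {naive_lift:.4f}")
print(f"lift per exposed user: {lift_per_exposed_user:.4f}")
print(f"selection effect:      {selection_effect:.4f}")
```

In this toy setup the naive comparison suggests a lift of 1.75 percentage points, while the experiment supports only 0.5. The remaining 1.25 points come from targeting having picked users who were more likely to buy anyway.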

Here is an overview of the share of “real” advertising effect by industry

Conclusion: “Is online advertising working? We simply don’t know”. If you want to find out: for once, don't sink your ad money with the targeting high priests at Google and Facebook, and put it into a (well-controlled) experiment instead.

Best always test: the research and its results (here as PDF: Gordon, Zettelmeyer et al. 2018)

- There is a significant discrepancy between the results of the experiments (A/B tests) and the “results” observed at the same time via Facebook's “KPIs”.

- In 50% of cases, Facebook's own KPIs “overestimate” the “effect” of Facebook ads by up to a factor of 3. No systematic pattern behind this is discernible (yet).

- In contrast to previous research, the study also found cases where advertising effect was underestimated.

- Reliable “modelling” is unlikely. In a thought experiment, various explanatory models with theoretical influencing factors were tested. In more than half of the experiments, the (unknown) data sources and (unknown) explanatory variables would have had to be as “strong” (valid) as the top variables in the real experiment.

- Nor will a “correct” measurement lead to reliable forecasts of advertising effect.